Copyright © 2018-2025 by A.I.

ETH Zurich, Switzerland

The benchmark consists of 78 AI and Computer Vision tests performed by neural networks running on your smartphone. It measures over 180 different aspects of AI performance, including the speed, accuracy, initialization time, etc. Considered neural networks comprise a comprehensive range of architectures allowing to assess the performance and limits of various approaches used to solve different AI tasks. A detailed description of the 26 benchmark sections is provided below.

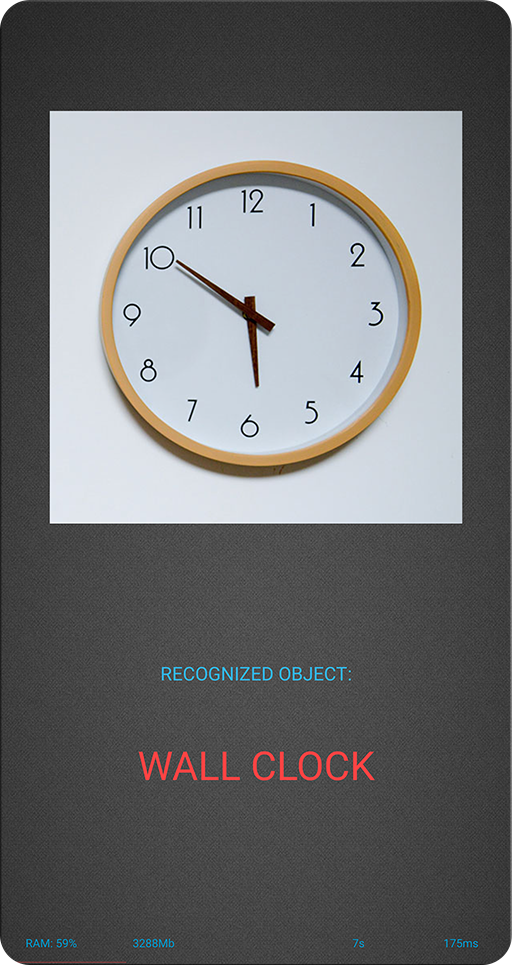

Section 1: Object Recognition / Classification

Neural Network: MobileNet - V2 | INT8 + FP16

Image Resolution: 224 x 224 px

Accuracy on ImageNet: 71.9 %

Paper & Code Links: paper / code

A very small yet already powerful neural network that is able to recognize 1000 different object classes based on a single photo with an accuracy of ~72%. After quantization, its size is less than 4Mb, which together with its low RAM consumption allows to lanch it on absolutely any currently existing smartphone.

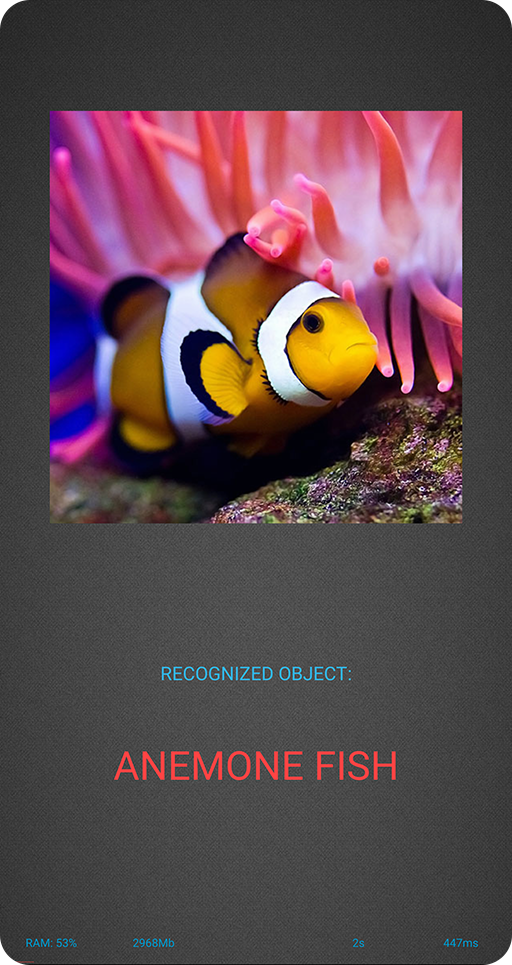

Section 2: Object Recognition / Classification

Neural Network: Inception - V3 | INT8 + FP16

Image Resolution: 346 x 346 px

Accuracy on ImageNet: 78.0 %

Paper & Code Links: paper / code

A different approach for the same task: now significantly more accurate, but at the expense of 6x larger size and increased computational requirements. As a clear bonus — can process images of higher resolutions, which allows for more accurate recognition and smaller object detection.

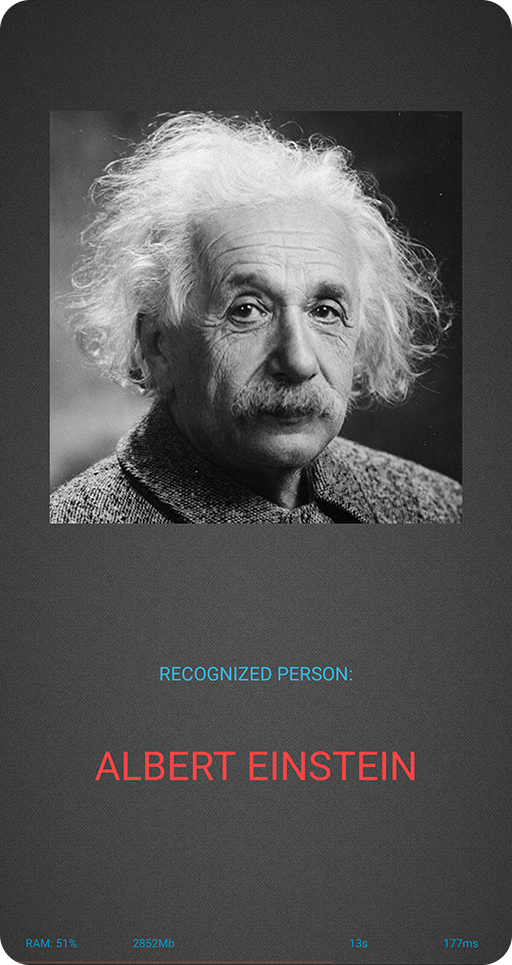

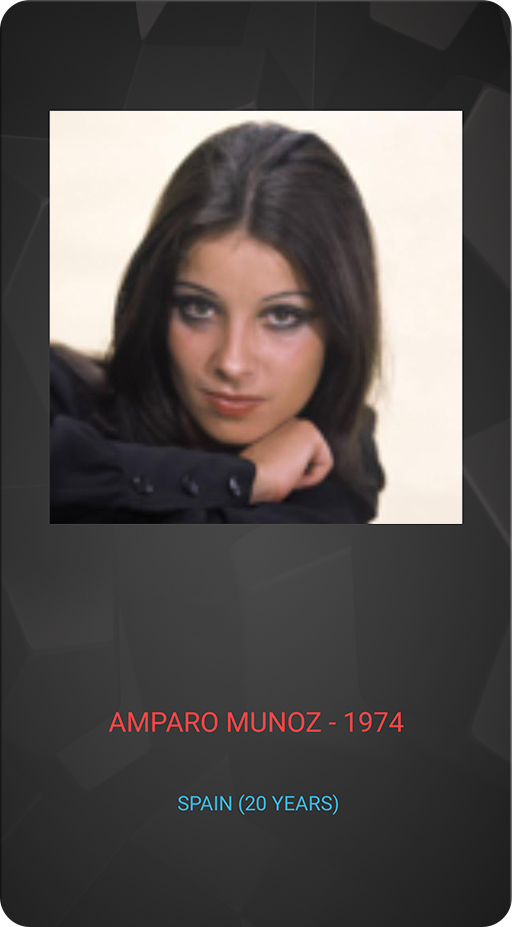

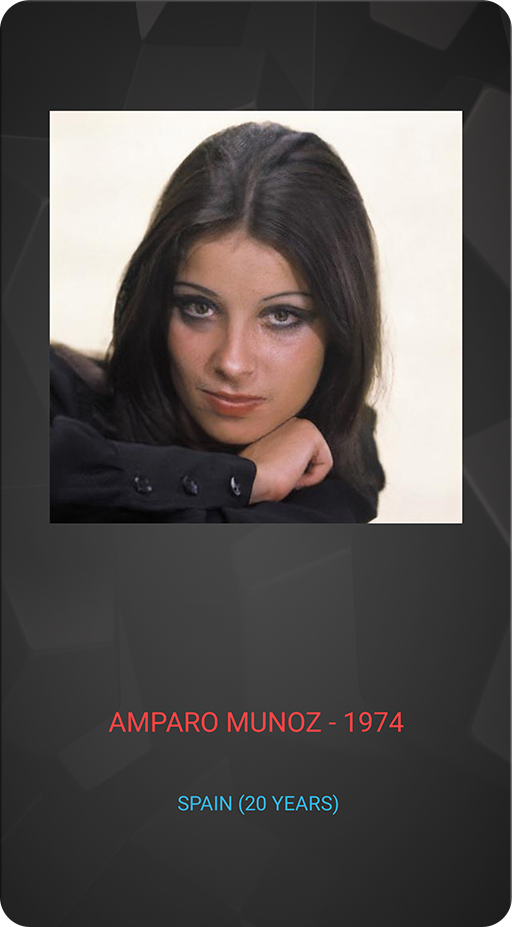

Section 3: Face Recognition

Neural Network: MobileNet - V3 | INT8 + FP16

Image Resolution: 512 x 512 px

Accuracy on ImageNet: 75.2 %

Paper & Code Links: paper / code

This task probably doesn't need an introduction: based on the face photo you want to identify the person. This is done in the following way: for each face image, a neural network produces a small feature vector that encodes the face and is invariant to its scaling, shifts and rotations. Then this vector is used to retrieve the most similar vector (and the respective identity) from your database that contains the same information about hundreds or millions of people.

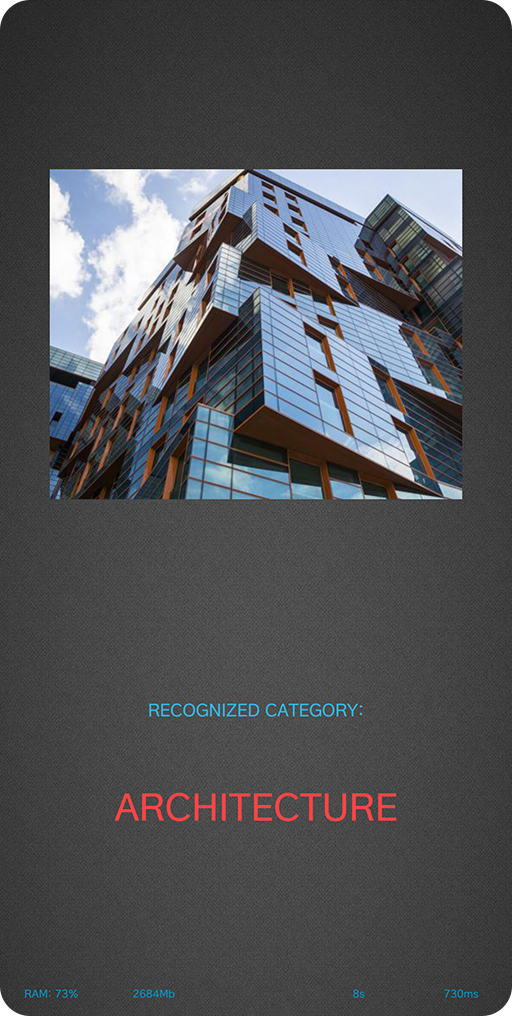

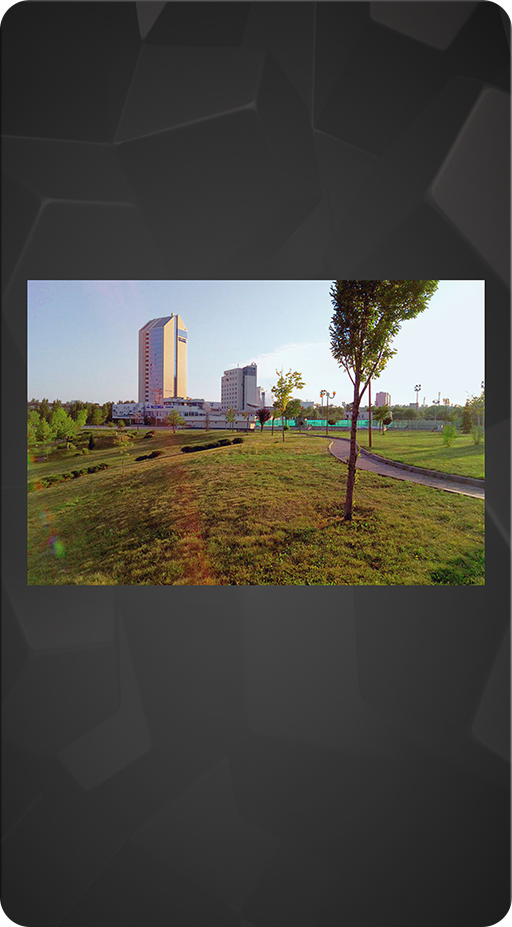

Section 4: Camera Scene Detection

Neural Network: EfficientNet-B4 | INT8 + FP16

Image Resolution: 380 x 380 px

Accuracy on ImageNet: 82.9 %

Paper & Code Links: paper, paper / code

Almost every mobile camera app has now a dedicated "AI mode" analyzing and recognizing the scene that you are capturing using neural networks. In this section, the camera scene detection task is performed using the latest state-of-the-art EfficientNet-B4 model that is able to recognize 30 different photo categories including group portrait, landscape, macro, underwater, food, indoor, stage, fireworks, documents, snow, etc.

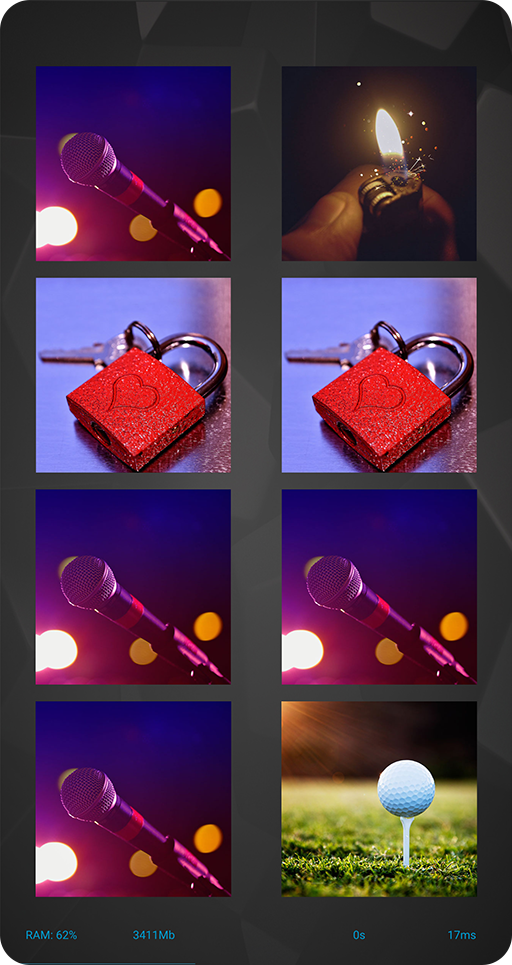

Sections 5-6: Parallel Object Recognition

Neural Network: Inception - V3 | INT8 + FP16

Image Resolution: 346 x 346 px

Accuracy on ImageNet: 78.0 %

Paper & Code Links: paper / code

What happens when several programs try to run their AI models at the same time on your device? Will it be able to accelerate all of them? To answer this question, we are running up to 8 floating-point and quantized Inception-V3 neural networks in parallel on your phone's NPU and DSP, measuring the resulting inference time for each AI model and analyzing the latencies obtained for each setup.

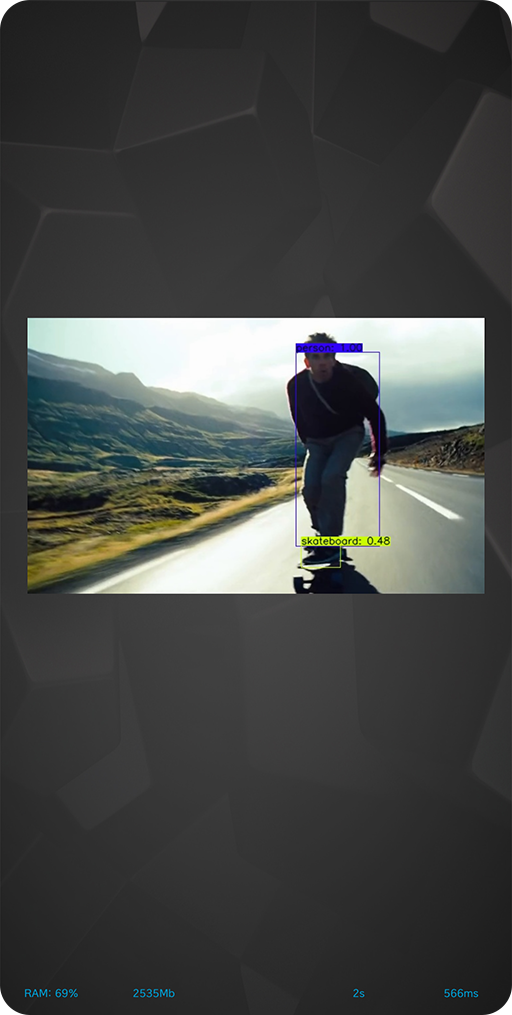

Section 7: Object Detection / Tracking

Neural Network: YOLOv4-Tiny | INT8 + FP16

Image Resolution: 416 x 416 px

Accuracy on MS COCO (mAP): 40.2

Paper & Code Links: paper / code

It's good to recognize what objects are on the image, but would be even better to detect their precise location and being able to track their movements when dealing with video data! For this, one needs a different model trained to perform object tracking. YOLO-V4 is one of the latest architectures available for this task that can detect and track 80 different object categories in real time on mobile devices.

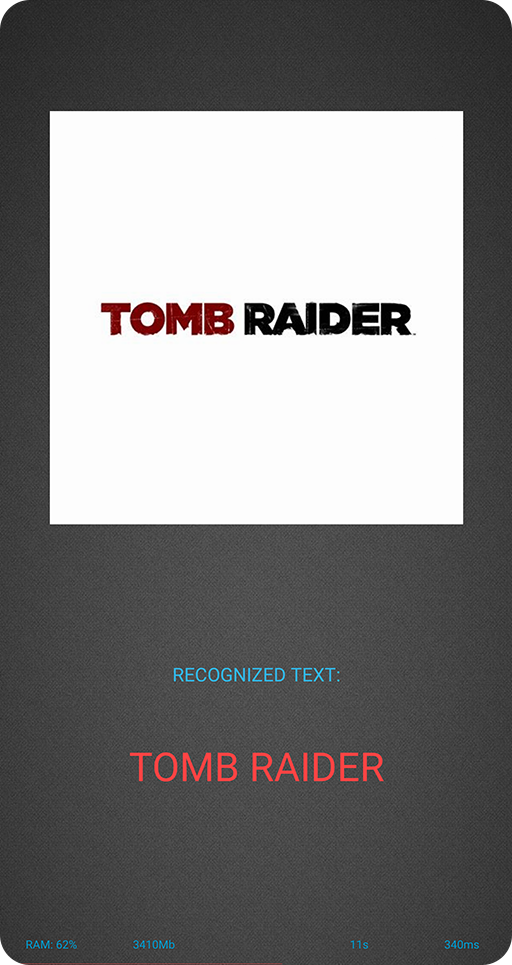

Section 8: Optical Character Recognition

Neural Network: CRNN / Bi-LSTM | INT8 + FP16 + FP32

Image Resolution: 64 x 200 px

IC13 Score: 86.7 %

Paper & Code Links: paper / code

A very standard task performed by a very standard end-to-end trained LSTM-based CRNN model. This neural network consists of two parts: the first one is a well-knows ResNet-34 network that is used here to generate deep features for the input data, while the second one, Bidirectional Static RNN, is taking these features as an input and predicts the actual words / letters on the image.

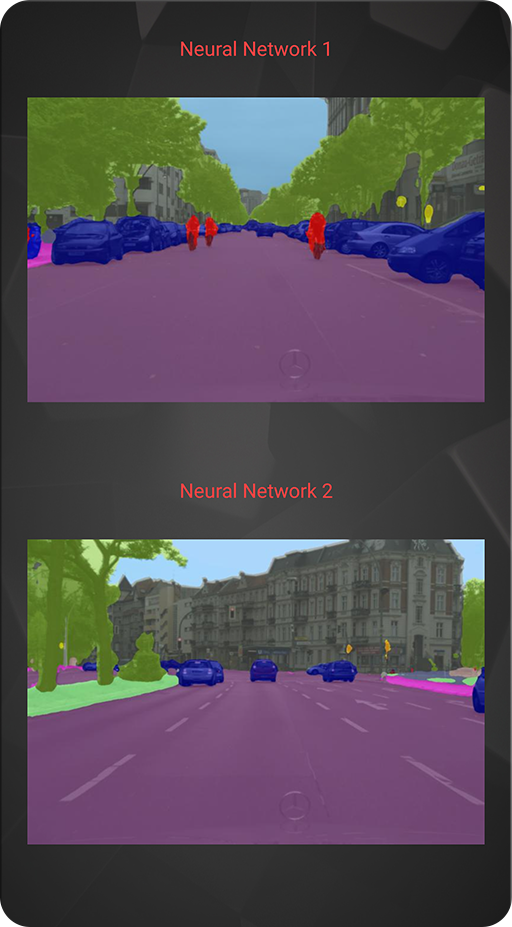

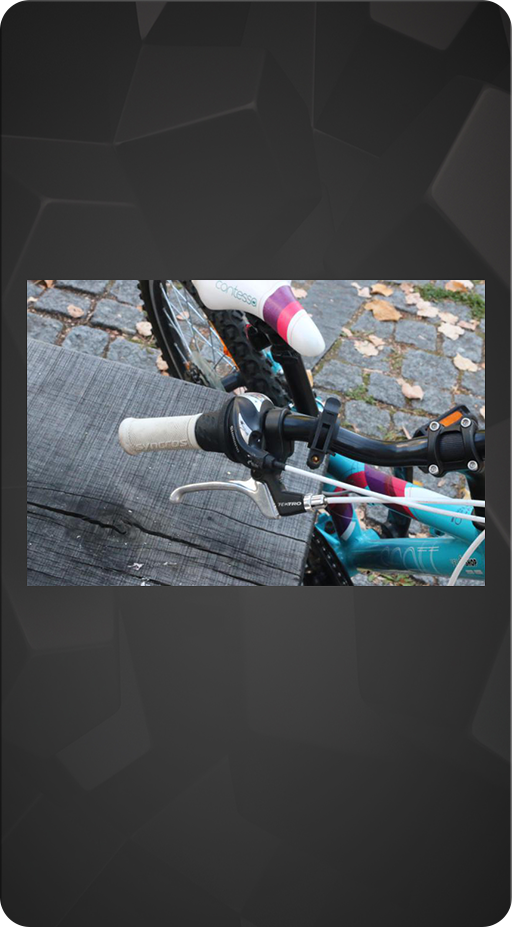

Original

Original

Segmented

Segmented

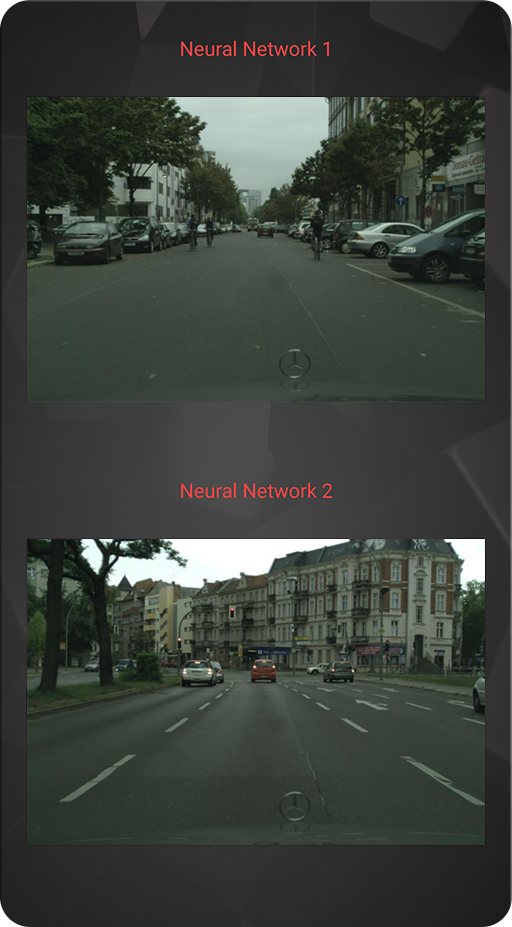

Sections 9-10: Semantic Segmentation

Neural Network: DeepLab-V3+ | INT8 + FP16

Image Resolution: 1024 x 1024 px

CityScapes (mIoU): 82.1 %

Paper & Code Links: paper / code

Running Self-Driving algorithm on your phone? Yes, that's possible too, at least you can perform a substantial part of this task — detect 19 categories of objects (e.g. car, pedestrian, road, sky, etc.) based on the photo from the camera mounted inside the car. On the right image, one can see the results of such pixel-size semantic segmentation (each color correpsonds to each object class) for a very popular DeepLab-V3+ network designed specifically for low-power devices.

Blurred

Blurred

Restored

Restored

Section 11: Image Deblurring

Neural Network: IMDN | INT8 + FP16

Image Resolution: 1024 x 1024 px

Set-5 Score (x3): 34.36 dB

Paper & Code Links: paper / code

Remember taking blurry photos using your phone camera? So, this is the task: make them sharp again. In the simplest case, this kind of distortions is modeled by applying a Gaussian blur to normal uncorrupted images, and then trying to restore them back with a neural network. In this task, the blur is removed by a popular albeit computationally demanding IMDN model (based on the IMDB blocks) that shows nice results on many image restoration problems.

Original

Original

Restored

Restored

Section 12: Image Super-Resolution

Neural Network: ESRGAN | INT8 + FP16

Image Resolution: 512 x 512 px

Set-5 Score (x4): 32.73 dB

Paper & Code Links: paper / code

Have you ever zoomed your photos? Remember artifacts, lack of details and sharpness? Then you know this task from your own experience: make zoomed photos look as good as the original images. In this case, the network is trained to do an equivalent task: to restore the original photo given its downscaled (e.g., by factor of 4) version. Here we consider a very powerful ESRGAN model reconstructing photo-realistic images even when dealing with pictures of very low resolution. Besides the standard loss functions, ESRGAN also uses a bit of magic called the GAN loss...

Original

Original

Restored

Restored

Section 13: Image Super-Resolution

Neural Network: SRGAN | INT8 + FP16

Image Resolution: 1024 x 1024 px

Set-5 Score (x4): 29.40 dB

Paper & Code Links: paper / code

What if we train our neural network using... another neural network? Yes, two networks performing two tasks: network A is trying to solve our super-resolution problem, while network B observes its results, tries to find some flaws there and then penalizes the network A accordingly. Sounds cool? In fact, it is cool: for the SR task, this approach called the GAN training was first introduced in the SRGAN paper. While it has its own issues, the produced results are often looking really amazing.

Original

Original

Restored

Restored

Section 14: Image Denoising

Neural Network: U-Net | INT8 + FP16

Image Resolution: 1024 x 1024 px

ISBI (IoU): 0.9203

Paper & Code Links: paper / code

Noise is arguably the number one issue affecting the quality of mobile photos. You think that your latest flagship cameraphone doesn't suffer from it? Just enable the RAW mode in your camera app and capture a few photos in moderate lighting conditions — the amount of noise on the original unprocessed images would likely impress you. Then how to remove it efficiently? For this, one can use a U-Net shaped CNN trained on paired noisy / noise free images: the resulting neural network would be able to fight noise even on photos taken in the most complex lighting scenarios.

Original

Original

Depth

Depth

Section 15: Depth Estimation

Neural Network: MV3-Depth | INT8 + FP16

Image Resolution: 1024 x 1536 px

ZED dataset (si-RMSE): 0.2836

Paper & Code Links: paper / paper

Yet another task performed by almost any recent mobile camera application: when selecting the portrait or bokeh modes, it tries to estimate the depth of the scene so that the correct amount of blur is applied to each photo region. While one usually needs the information from multiple cameras or ToF sensors for this, it is also possible to predict the depth quite accurately based on one single image. Moreover, the considered MV3-Depth network can perform this task extremely fast, achieving more than 30 FPS on the recent smartphone chipsets.

Original

Original

Enhanced

Enhanced

Sections 16-17: Photo Enhancement

Neural Network: DPED | INT8 + FP16

Image Resolution: 1536 x 2048 / 1024 x 1536 px

DPED PSNR i-Score: 18.11 dB

Paper & Code Links: paper / paper / code

Struggling when looking at photos from your old phone? This can be fixed: a properly trained neural network can make photos even from an ancient iPhone 3GS device looking nice and up-to-date. To achieve this, it observes and learns how to transform photos from a low-quality device into the same photos from a high-end DSLR camera. Of course, there are some obvious limitations for this magic (e.g., the network should be retrained for each new phone model), but the resulting images are looking quite good, especially for old devices.

Original

Original

Bokeh

Bokeh

Section 18: Bokeh Simulation

Neural Network: PyNET+ | INT8 + FP16

Image Resolution: 512 x 1024 px

EBB! PSNR Score: 23.28 dB

Paper & Code Links: paper / code

We already mentioned this well-known and popular AI task — blurring the background like on high-end DSLRs: just select the portrait mode in the camera app to see how it works on your device. In this section, a powerful PyNET+ model processing each input image in parallel at three different scales and utilizing all available NPU computational resources is used to render bokeh effect without the need of multiple cameras: after being pre-trained, it can add an artistic blur to arbitrary images.

RAW

RAW

Restored

Restored

Section 19: Learned Smartphone ISP

Neural Network: PUNET | INT8 + FP16

Image Resolution: 1088 x 1920 px

FujiFilm UltraISP Score: 23.03 dB

Paper & Code Links: paper / paper / code

Let's increase the level of insanity: instead of enhancing various aspects of mobile photos separately, we will now replace the entire RAW image processing pipeline with a single neural network trained to do all the tasks at once including photo demosaicing, denoising, deblurring, super-resolution, tone mapping, etc. This approach not only works well, but is able to compete with traditional ISP systems in terms of the resulting photo quality. However, you will need an extremely powerful NPU for this task as fast large-resolution image processing is needed here.

Section 20: Video Super-Resolution

Neural Network: XLSR | INT8 + FP16

Image Resolution: 1080 x 1920 px

DIV2K Score (x3): 30.11 dB

Paper & Code Links: paper / code

We have already seen the image SR task — so, what's new in the video super-resolution problem? Well, the first and the major change is the tightened computational requirements: now we want to super-resolve an even larger-resolution FullHD videos in real time at more than 30 FPS! Thus, the previously considered SRGAN / ESRGAN models would be too slow here, and we need to use something more efficient and NPU-friendly, like the XLSR neural network.

Sections 21-22: Video Super-Resolution

Neural Network: VSR | INT8 + FP16

Image Resolution: 2160 x 3840 px

DIV2K Score (x3): 29.75 dB

Paper & Code Links: paper

We can go even further — as the resolution of many mobile and TV screens already exceeds 2K, we can try to perform 4K video super-resolution bearing in mind our target frame rate of 30 FPS. In this task, we use a very efficient VSR model designed for mobile and IoT devices, requiring very little computational effort: you can technically run this network even on a 10-year old the Samsung Galaxy S2 phone, though a latency of 12 seconds per frame would probably upset you...

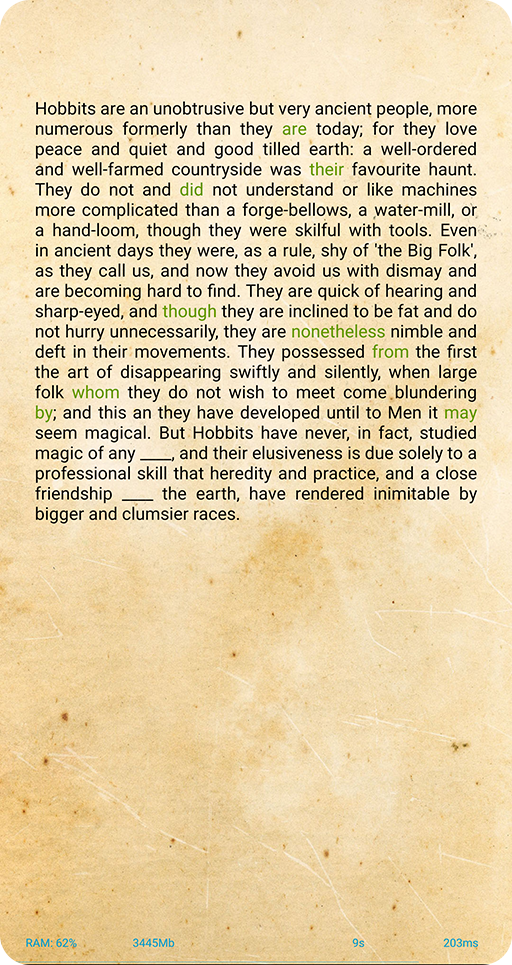

Section 23: Text Completion

Neural Network: Static RNN / LSTM | INT8 + FP16 + FP32

Embeddings Size: 32 x 500

Layers | LSTM Units: 4 | 512

Paper & Code Links: paper / code

Yet another standard deep learning problem on smartphones — providing text suggestions based on what the user types. In this task, we consider a variation of this NLP task: a simple static LSTM model learns to fill in the gaps in the text using sentence semantics inferred from the provided Word2vec word embeddings.

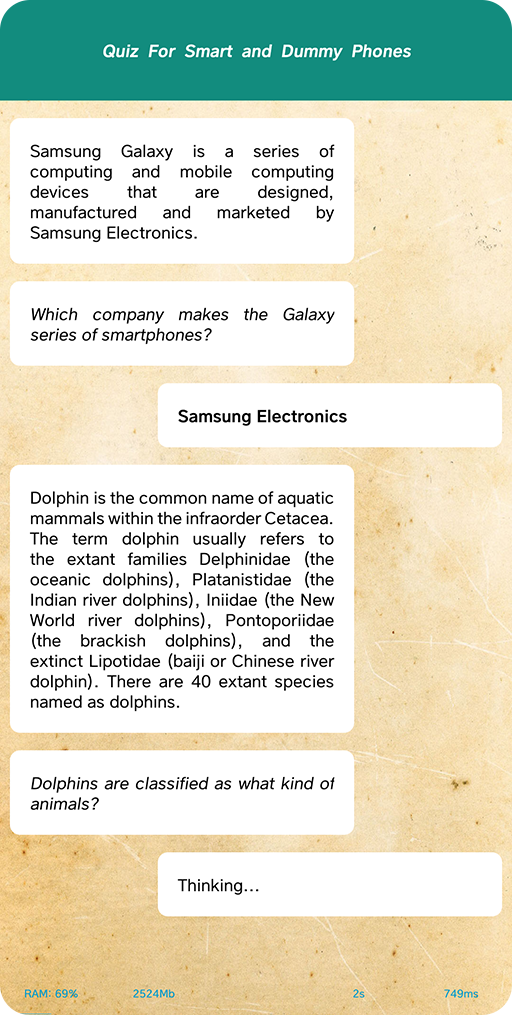

Sections 24: Question Answering

Neural Network: MobileBERT | INT8

Embeddings Size: 384

SQuAD V2.0 Score: 79.2

Paper & Code Links: paper / code

Just 15 years ago, it was hard to imagine that one would be able to talk to a phone and ask it questions about some random things. Was even harder to imagine that the phone would be easily understanding your requests and providing you with the correct answers. Yet, this is the reality — with the MobileBERT model, it is possible to ask this network arbitrary questions, and it would be looking for the answers in the specified text databases. What really impresses is its performance: the responses are very accurate and come almost instantaneously.

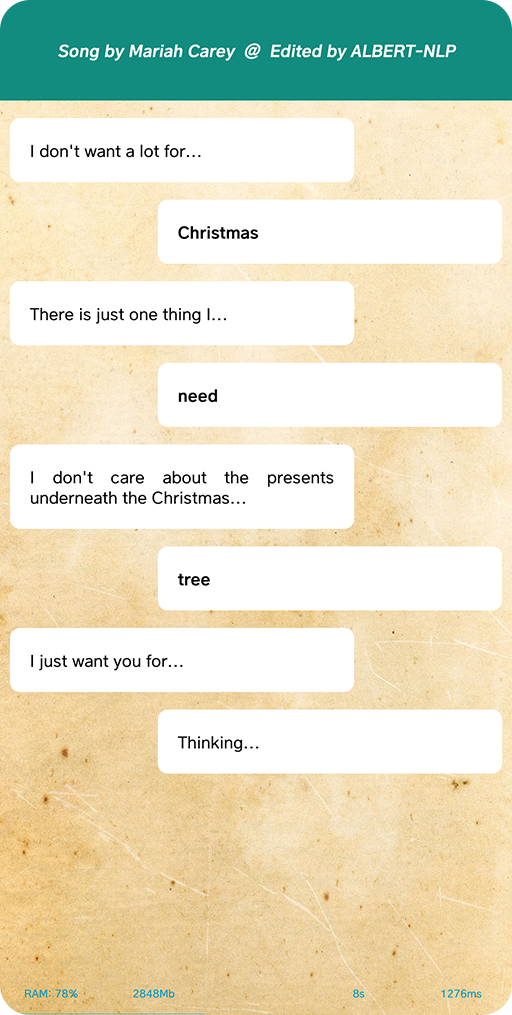

Section 25: Text Completion

Neural Network: ALBERT | FP16

Embeddings Size: 128

SQuAD V2.0 Score: 80.0

Paper & Code Links: paper / code

ALBERT is a further extension of the original BERT architecture: being lighter, smaller (just 45 MB for FP32 model) and faster, this model is a good choice for mobile devices. One can use this neural network for many different NLP tasks including question answering, filling in the gaps in the text, intelligent reply suggestions, or even songs completion that can be seen in this test.

Section 26: Memory Limits

Neural Network: ResNet | INT8 + FP16

Image Resolution: 9 MP

# Parameters: 372.803

Paper & Code Links: paper / code

It's not all about the speed — there is a little use of a powerful mobile NPU if it cannot process high-resolution images. In the past sections, we have already seen that one might need neural networks for photo denosing, super-resolution, dehazing, ISP processing or bokeh effect rendering, and in all these tasks we are dealing with at least 8-12MP images. This test is aimed at finding the limits of your device: how big images can it handle when using a common ResNet network?

Copyright © 2018-2025 by A.I.

ETH Zurich, Switzerland